Happy Holidays, Reader!

As we get ready to actually take a couple of days off for Christmas and prepare for the next year, I wanted to give an update on our current file system journal research.

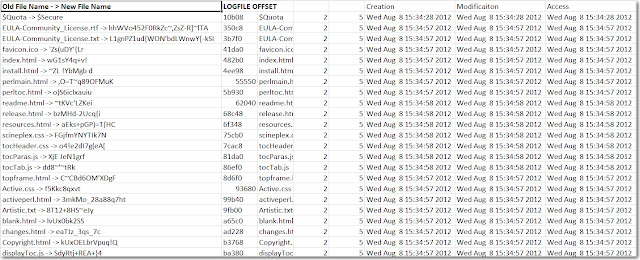

NTFS - Our NTFS Journal Parser has hit 1.0. We are still writing up a blog post to try to really encapsulate what we want to say, but you can get a copy now by emailing me at dcowen@g-cpartners.com. If you sent a request earlier and I missed it, I apologize; just send it again.

EXT3/4 - We have a working EXT3 Journal parser now, and we can reassociate file names with deleted inodes. However, it's not as straightforward as the NTFS Journal (as strange as that may sound), because the EXT3/4 Journal is a redo-only journal: it does not store the ability to roll back a change, just the ability to redo it. We are able to recover file names because when a change is recorded, it's not just the inode being changed that's written to the redo log, it's the entire block that the inode is stored in! So we have a lot of ancillary data to parse through, and then we search all of those entries for directory entries pointing to the known deleted inode.
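To make that search concrete, here is a minimal sketch in Python. It is not the parser itself, just an illustration under some assumptions: the block copies have already been pulled out of the journal as raw bytes, and they use the standard ext2/3/4 directory entry layout (4-byte inode, 2-byte record length, 1-byte name length, 1-byte file type, then the name). The function names `parse_dir_entries` and `names_for_inode` are made up for this example.

```python
import struct

def parse_dir_entries(block: bytes):
    """Yield (inode, name) for each directory entry found by walking
    the rec_len chain of one block. Assumes the little-endian
    ext2/3/4 directory entry layout."""
    offset = 0
    while offset + 8 <= len(block):
        inode, rec_len, name_len, _file_type = struct.unpack_from(
            "<IHBB", block, offset
        )
        # Bail out on values that cannot belong to a valid entry;
        # the block is probably not a directory block at all.
        if (rec_len < 8 or rec_len % 4
                or offset + rec_len > len(block)
                or 8 + name_len > rec_len):
            break
        if inode != 0 and name_len > 0:
            raw = block[offset + 8:offset + 8 + name_len]
            yield inode, raw.decode("utf-8", errors="replace")
        offset += rec_len

def names_for_inode(blocks, target_inode):
    """Collect the distinct names that point at target_inode across
    every block copy handed in (hypothetical helper)."""
    found = []
    for block in blocks:
        for inode, name in parse_dir_entries(block):
            if inode == target_inode and name not in found:
                found.append(name)
    return found
```

Calling `names_for_inode(journal_blocks, deleted_inode)` with a list of block copies returns whatever names those copies still associate with the inode. This sketch only follows live entries in each copy; it does not carve entries out of slack space, and it ignores the journal's own descriptor and commit blocks.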

We've actually had to switch to a database backend to make the EXT parser work, as there is a lot of data we have to get through to find these changes. If you want in on the beta, please email me at dcowen@g-cpartners.com and we'll get you in the loop.
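For a sense of why a database helps, here is a small hedged sketch using Python's built-in `sqlite3` module. The table name, column names, and the idea of keying rows by a journal sequence number are all assumptions made for this example, not a description of our actual schema.

```python
import sqlite3

def build_index(records):
    """Load (journal_seq, fs_block, inode, name) tuples into an
    in-memory SQLite table indexed by inode (hypothetical schema)."""
    db = sqlite3.connect(":memory:")
    db.execute(
        "CREATE TABLE dir_entries ("
        "journal_seq INTEGER, fs_block INTEGER, inode INTEGER, name TEXT)"
    )
    db.execute("CREATE INDEX idx_dir_entries_inode ON dir_entries (inode)")
    db.executemany("INSERT INTO dir_entries VALUES (?, ?, ?, ?)", records)
    db.commit()
    return db

def names_for_deleted_inode(db, inode):
    """Return (name, latest_seq) pairs for one inode, newest first."""
    rows = db.execute(
        "SELECT name, MAX(journal_seq) FROM dir_entries "
        "WHERE inode = ? GROUP BY name "
        "ORDER BY MAX(journal_seq) DESC",
        (inode,),
    )
    return [(name, seq) for name, seq in rows]
```

With every directory entry from every journaled block loaded once, looking up a deleted inode becomes a single indexed query instead of another pass over all of the raw blocks.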

The only real change we've found between the ext3 and ext4 journals is an added CRC value, but we'll look at it further as we move forward to make sure of that. I'm excited about ext4, as it's the default file system for most Android phones.

HFS+ - This is next on our list. We got a shiny new Mac mini for testing, and we are looking forward to it.

That's it. I hope you understand it's been a busy year for us, so I haven't been able to write up all the other cool stuff we want to share with you. In book news, Computer Forensics, A Beginner's Guide is a third of the way through copy edit, and the book should be out in the spring. I'm feeling pretty good about it, and it's already listed on the Amazon store (since it's soooo delayed, which is 100% my fault). I actually managed to snag www.learndfir.com for the book, and I'll have tools, documents, images and this blog mirrored there. We are also making tutorial videos for the cases in the book, which will go up on our YouTube channel, LearnForensics.

I hope to see you all at conferences this year. If you are looking for a speaker on advanced file system forensics, please let me know!

Talk to you all in 2013!
