Hello Reader,

Today we have something special: a guest post from Hal Pomeranz. One of the best parts of sharing research and information is when others come back and extend the work for the benefit of us all. Hal has kindly not only shared his work extending the idea of recovering mlocate databases from unallocated space, he has also written a tool to do so! I'll be testing Hal's tool on my test image and will post those results tomorrow. In the meantime, enjoy this very well written post!

If you want to contact Hal, go to http://deer-run.com/~hal and if you want to train with Hal, see http://www.sans.org/instructors/hal-pomeranz as he is an excellent instructor with SANS. Hal is an awesome forensicator and community resource who is also willing to work on a 1099 basis for those of you who, like me, run labs and are looking to extend your capabilities.

You can download the new tool Hal has made here: https://mega.co.nz/#!TsoTlSjR!BMz7BQqOhCeLGumWu51kaw4v_VFxd6UT3lyqu-ljUdc

With all that said, here is today's guest post from Hal.

Hal Pomeranz, Deer Run Associates

One of the nice things about our little DFIR community is how researchers build off of each other’s work. I put together a tool for parsing mlocate.db files for a case I was working on. David Cowen and I had a conversation about it on his Forensic Lunch. David had some questions about whether we could find previous copies of the mlocate.db in unallocated blocks, and wrote several blog posts on the subject here on the HECF blog. David’s work prompted me to do a little work of my own, and he was kind enough to let me share my findings on his blog.

Hunting mlocate.db Files

In Part 3 of the series, David suggested using block group information to find mlocate.db data in disk blocks. My thought was, since the mlocate.db files have such a clear start-of-file signature, we could use the sigfind tool from the Sleuthkit to find mlocate.db files more quickly.

    # sigfind -b 4096 006D6C6F /dev/mapper/RD-var
    Block size: 4096  Offset: 0  Signature: 6D6C6F
    Block: 100736 (-)
    Block: 141568 (+40832)
    Block: 183808 (+42240)
    Block: 232192 (+48384)
    Block: 269312 (+37120)

Here I’m running sigfind against my own /var partition. “006D6C6F” is a null byte followed by “mlo” in hex, the first four bytes of a mlocate.db file (sigfind only allows signatures of up to 4 bytes). I’m telling sigfind to look for this signature at the start of each 4K block (“-b 4096”). As you can see, sigfind located five candidate blocks.
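For readers who want to see the idea behind that sigfind run spelled out, here is a minimal Python sketch of a block-aligned signature search. It is not how sigfind is implemented; the function name `find_block_signatures` and the synthetic three-block "image" are made up for illustration.

```python
import io

# First four bytes of the "\0mlocate" magic (hex 006D6C6F).
MAGIC = b"\x00mlo"

def find_block_signatures(stream, signature=MAGIC, block_size=4096):
    """Return the numbers of the blocks that begin with `signature`."""
    hits = []
    block_no = 0
    while True:
        block = stream.read(block_size)
        if not block:
            break
        if block.startswith(signature):
            hits.append(block_no)
        block_no += 1
    return hits

# Tiny synthetic image: three 4K blocks, only the middle one starts with the magic.
image = bytes(4096) + b"\x00mlocate" + bytes(4096 - 8) + bytes(4096)
print(find_block_signatures(io.BytesIO(image)))  # prints [1]
```

Against a real device or image file you would open it in binary mode and pass the file object in place of the `io.BytesIO` wrapper.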

What was interesting to me was that the blocks are spread across several different block groups in the file system. As David suggested in Part 3, the EXT file system normally tries to place files in the same block group as their parent directory. But when the block group fills up, the file can be placed elsewhere on disk.
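To make the block group point concrete, here is a small Python sketch that maps each sigfind hit to a block group number. It assumes 32768 blocks per group, which is the usual default for a 4K block size; that figure is an assumption here, and the real value for a given file system should be taken from fsstat output.

```python
def block_group(block_no, blocks_per_group=32768):
    """Block group that holds block_no (assumes the first data block is 0)."""
    return block_no // blocks_per_group

# The five candidate blocks reported by sigfind.
hits = [100736, 141568, 183808, 232192, 269312]
for b in hits:
    print(b, block_group(b))
```

Under that assumption the five hits land in groups 3, 4, 5, 7, and 8, so no two copies share a block group.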

I wanted to make sure that these were all legitimate hits and not false positives. So I used a couple of other Sleuthkit tools and a little Command-Line Kung Fu:

    # for b in 100736 141568 183808 232192 269312; do
        echo ===== $b;
        blkstat /dev/mapper/RD-var $b | grep Allocated;
        blkcat -h /dev/mapper/RD-var $b | head -1;
    done
    ===== 100736
    Not Allocated
    0  006d6c6f 63617465 00000127 00010000  .mlo cate ...' ....
    ===== 141568
    Not Allocated
    0  006d6c6f 63617465 00000127 00010000  .mlo cate ...' ....
    ===== 183808
    Not Allocated
    0  006d6c6f 63617465 00000127 00010000  .mlo cate ...' ....
    ===== 232192
    Not Allocated
    0  006d6c6f 63617465 00000127 00010000  .mlo cate ...' ....
    ===== 269312
    Allocated
    0  006d6c6f 63617465 00000127 00010000  .mlo cate ...' ....

The blkcat output is showing us that these all look like mlocate.db files. Since block 269312 is “Allocated”, that must be the current mlocate.db file, while the others are previous copies we may be able to recover.

Options for Recovering Deleted Files

Let’s review our options for recovering deleted data in older Linux EXT file systems:

· For EXT2, use ifind from the Sleuthkit to find the inode that points to the unallocated block that the mlocate.db signature sits in. Then use icat to recover the file by inode number.

· For EXT3, the block pointer information in the inode gets zeroed out. My frib tool uses metadata in the indirect blocks of the file to recover the data (actually, we could use frib in EXT2 as well).

Unfortunately, I’m dealing with an EXT4 file system here, and things are much harder. Like EXT3, much of the EXT4 inode gets zeroed out when the file is unlinked. But EXT4 uses extents for addressing blocks, so we don’t have the indirect block metadata to leverage with a tool like frib. You’re left with trying to “carve” the blocks out of unallocated.

However, our carving is going to run into a snag pretty

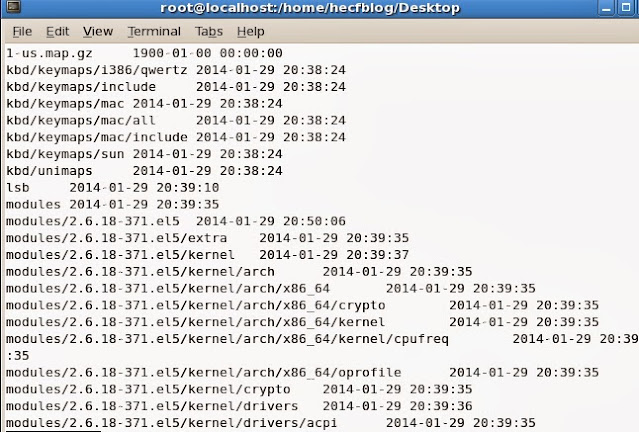

quickly. Take a look at the istat output from the current mlocate.db file:

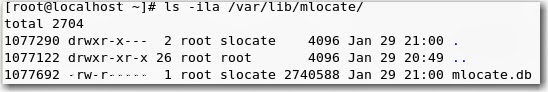

    # ls -i /var/lib/mlocate/mlocate.db
    566 /var/lib/mlocate/mlocate.db
    # istat /dev/mapper/RD-var 566
    inode: 566
    Allocated
    […]
    Direct Blocks:
    269312 269313 269314 269315 269316 269317 269318 269319
    […]
    270328 270329 270330 270331 270332 270333 270334 270335
    291840 291841 291842 291843 291844 291845 291846 291847
    […]

The first 1024 blocks of the file are contiguous from block 269312 through 270335. But then there’s a clear gap and we’re starting a new extent with block 291840. If we had to carve this file, we’d have a significant problem because the file is fragmented. And unfortunately, all of the mlocate.db files I’ve examined in my testing contained multiple extents.
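If you want to spot fragmentation like this programmatically, one simple approach is to collapse a list of block numbers into contiguous runs. The Python sketch below is illustrative only; the helper name `contiguous_runs` is hypothetical, and the block list is rebuilt from the istat output above, so the second run covers only the eight blocks that are visible before the output is elided.

```python
def contiguous_runs(blocks):
    """Collapse an ordered list of block numbers into (start, length) runs."""
    runs = []
    for b in blocks:
        if runs and b == runs[-1][0] + runs[-1][1]:
            runs[-1][1] += 1          # block extends the current run
        else:
            runs.append([b, 1])       # gap: start a new run
    return [tuple(r) for r in runs]

# 269312 through 270335, then the visible start of the next extent.
blocks = list(range(269312, 270336)) + list(range(291840, 291848))
print(contiguous_runs(blocks))  # prints [(269312, 1024), (291840, 8)]
```

A carver that simply reads forward from the signature block would only ever see the first of those runs.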

We could certainly get useful information from the start of the file:

    # blkcat /dev/mapper/RD-var 232192 1024 >var-232192-1024
    # mlocate-time var-232192-1024
    /etc 2014-03-01 09:47:12
    /etc/.java 2013-05-09 16:15:05
    /etc/.java/.systemPrefs 2013-05-09 16:15:05
    /etc/ConsoleKit 2013-05-09 16:15:05
    /etc/ConsoleKit/run-seat.d 2013-05-09 16:15:05
    /etc/ConsoleKit/run-session.d 2013-05-09 16:15:05
    /etc/ConsoleKit/seats.d 2014-01-31 11:11:35
    […]
    /home/hal/SANS/framework/data/msfweb/vendor/rails/actionpack/test/fixtures/addresses/.svn/prop-base 2013-05-09 18:52:29
    /home/hal/SANS/framework/data/msfweb/vendor/rails/actionpack/test/fixtures/addresses/.svn/props 2013-05-09 18:52:29
    /home/hal/SANS/framework/data/msfweb/vendor/rails/actionpack/test/fixtures/addresses/.svn/text-base 2013-05-09 18:52:30
    /home/hal/SANS/framework/data/msfweb/vendor/rails/0.556:avahi-daemon
    0.564:bluetooth
    0.584:ufw
    0.586:smbd
    […]

I use blkcat here to dump out 1024 blocks from one of the unallocated mlocate.db signatures, and then hit it with my mlocate-time tool. Things go great for quite a while, but then we clearly run off the end of the extent and into some unrelated data. I was able to pull back almost 6,000 individual file entries from this chunk of data, but the current mlocate.db file on my system has over 35,000 entries.

Looking for Another Signature

The fragmentation issue got me wondering if there was some signature I could use to find the other fragments of the file elsewhere on disk. Here’s a relevant quote from the mlocate.db(5) manual page (emphasis mine):

The rest of the file until EOF describes directories and their contents. Each directory starts with a header: 8 bytes for directory time (seconds) in big endian, 4 bytes for directory time (nanoseconds) in big endian (0 if unknown, less than 1,000,000,000), 4 bytes padding, and a NUL-terminated path name of the the directory. Directory contents, a sequence of file entries sorted by name, follow.
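The header layout in that quote maps neatly onto a fixed-size structure. Here is a minimal Python sketch of decoding one directory header; the function name `parse_directory_header` and the sample bytes are invented for illustration, and the sketch handles only the header, not the file entries that follow it.

```python
import struct

# 8-byte seconds, 4-byte nanoseconds (both big endian), then 4 bytes of padding.
HEADER = struct.Struct(">QI4x")

def parse_directory_header(buf, offset=0):
    """Return (seconds, nanoseconds, path, offset_after_path) for one header."""
    seconds, nanos = HEADER.unpack_from(buf, offset)
    path_start = offset + HEADER.size
    path_end = buf.index(b"\x00", path_start)   # path name is NUL-terminated
    path = buf[path_start:path_end].decode("utf-8", errors="replace")
    return seconds, nanos, path, path_end + 1

# Synthetic header: an arbitrary timestamp, zero nanoseconds, and the path "/etc".
sample = HEADER.pack(1393667232, 0) + b"/etc\x00"
print(parse_directory_header(sample))  # prints (1393667232, 0, '/etc', 21)
```

The returned offset points at the first byte after the path name, which is where the directory's file entries would begin.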

Examining several mlocate.db files, the 4 bytes of padding are nulls, and the directory pathname begins with a slash (“/”). So “000000002f” is a 5-byte signature we could use to look for directory entries in mlocate.db file fragments.

sigfind doesn’t help us here, because it wants to look for a signature at the start of a block or at a specific block offset. Since I needed to look for the signature anywhere in a block, I threw together a quick and dirty Perl script for finding our signature in a disk image. I haven’t done a significant amount of testing, but early indications are:

· False positives can be a problem: a series of nulls followed by a slash is unfortunately common in data in a typical Linux file system. In order to combat this, I’ve added a threshold value that requires a block to have a minimum of 6 instances of our signature before being reported as a possible mlocate.db chunk (the threshold value is configurable on the command line).

· False negatives are also an issue. If a directory contains a large number of files (think /usr/lib), then the directory contents may span multiple blocks. That means one or two blocks with no instances of our “start of directory entry” signature, even though those blocks actually are part of a mlocate.db fragment.
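The thresholding idea from the bullets above can be sketched in a few lines of Python. To be clear, this is not the Perl script described in this post, just an illustration of the same approach; `candidate_blocks` and `fake_block` are hypothetical names, and the test data is synthetic.

```python
import io

# Four bytes of null padding followed by the leading slash of a path.
SIGNATURE = b"\x00\x00\x00\x00/"

def candidate_blocks(stream, block_size=4096, threshold=6):
    """Return (block_number, hit_count) for blocks with >= threshold hits."""
    hits = []
    block_no = 0
    while True:
        block = stream.read(block_size)
        if not block:
            break
        count = block.count(SIGNATURE)   # matches anywhere in the block
        if count >= threshold:
            hits.append((block_no, count))
        block_no += 1
    return hits

def fake_block(n_dirs, block_size=4096):
    """Build a synthetic block holding n_dirs zeroed headers plus short paths."""
    body = b"".join(bytes(16) + b"/dir%d\x00" % i for i in range(n_dirs))
    return body.ljust(block_size, b"\xff")

image = fake_block(2) + fake_block(7) + fake_block(6)
print(candidate_blocks(io.BytesIO(image)))  # prints [(1, 7), (2, 6)]
```

Because each block is counted on its own, a signature that straddles a block boundary is missed, and blocks in the middle of a very large directory still fall below the threshold, which is the false-negative case described above.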

That being said, the script does do a reasonable job of finding groups of blocks that are part of fragmented mlocate.db files. With a little manual analyst intervention, it appears that it would be possible to reconstitute a deleted mlocate.db from an EXT4 file system, assuming none of the original blocks had been overwritten.

Frankly, our signature could be a little better too. It’s not just “four nulls followed by a slash”, it’s “four nulls followed by a Linux path specification”. Using a regular expression for this would likely reduce the false-positive problem plaguing the current script.
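As one possible take on that stricter signature, here is a Python sketch that combines a regular expression with the nanosecond limit from the manual page quote. The restriction to printable ASCII path characters is an assumption made for this example (real path names can contain any byte except NUL, so it would miss some directories), and `plausible_headers` is a hypothetical name, not part of any existing tool.

```python
import re
import struct

# Four null padding bytes, then a NUL-terminated path starting with "/".
# Printable ASCII only: an assumption for this sketch, not a format rule.
DIR_HEADER = re.compile(rb"\x00{4}(/[\x20-\x7e]*)\x00")

def plausible_headers(block):
    """Return (path, seconds) for matches whose nanosecond field is valid."""
    results = []
    for m in DIR_HEADER.finditer(block):
        start = m.start() - 12      # 8 bytes seconds + 4 bytes nanoseconds
        if start < 0:
            continue                # header would begin before this buffer
        seconds, nanos = struct.unpack_from(">QI", block, start)
        if nanos < 1_000_000_000:   # limit stated in mlocate.db(5)
            results.append((m.group(1).decode("ascii"), seconds))
    return results

good = struct.pack(">QI", 1393667232, 0) + bytes(4) + b"/etc\x00"
bad = b"\xff" * 12 + bytes(4) + b"/not-a-header\x00"
print(plausible_headers(good + bad))  # prints [('/etc', 1393667232)]
```

The second record matches the byte pattern but is rejected because its nanosecond field is out of range, which is the kind of false positive a plain five-byte signature would let through.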

Wrapping Up (For Now)

I’ve had fun getting a deeper understanding of mlocate.db files and some of the challenges in trying to recover this artifact from unallocated. But I still see some open questions. Can we improve the fidelity of our file signature to eliminate false positives? And given that there are chunks of at least five different mlocate.db files scattered around this file system, would we be able to put the correct chunks back together to recover the original file(s)? Perhaps David or somebody else in the community would like to tackle these issues.
